Actuation

Baxter Conductor Motion

Setup

To execute our Baxter motions, we use Baxter’s joint trajectory controller and start its action server with “rosrun baxter_interface joint_trajectory_action_server.py”. To plan the motions, we start MoveIt with “roslaunch baxter_conductor_moveit_config demo_baxter.launch”. MoveIt is configured through our modified kinematics.yaml and joint_limits.yaml to use the TRAC-IK solver and Baxter’s joint velocity limits. The launch file starts the move_group node, which sends each planned trajectory to the JointTrajectoryActionServer to actuate the motors. Using an action server allows us to cancel a plan during execution, which is important for timing.

Getting Goal Position Specifics

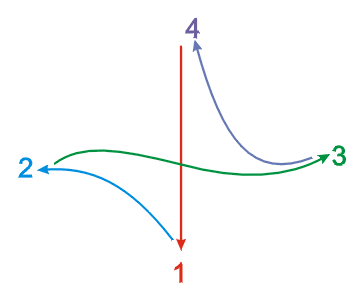

In conducting, the hands and arms pass through a set of positions at specific points in the music to give visual cues to the musicians. For the right arm, these positions mark the beats of a given time signature. The left arm marks the beats as well, and also makes movements that control the loudness (dynamics) of the music. Finally, there is an ending motion that coordinates the stop of the music.

These positions were recreated by hand on the Baxter robot, and a helper script, save_joint_vals.py, was used to name the joint positions and save them as text files in the motion/positions/ folder. The positions were recorded for each arm individually, so that different sequences of left-arm and right-arm motions can be combined.
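The recording step can be sketched roughly as follows. This is not the contents of save_joint_vals.py: the one-joint-per-line file format, the function signatures, and the pose name beat_1 are assumptions made for illustration. On the robot, the dictionary of joint angles could come from baxter_interface.Limb("right").joint_angles(); here it is typed in by hand so the sketch runs without ROS.

```python
import os
import tempfile


def save_joint_vals(directory, name, joint_positions):
    """Save one named pose as '<joint> <angle>' lines in <directory>/<name>.txt.

    The file layout is an assumption for this sketch, not the project's format.
    """
    if not os.path.isdir(directory):
        os.makedirs(directory)
    path = os.path.join(directory, name + ".txt")
    with open(path, "w") as f:
        for joint in sorted(joint_positions):
            # repr() keeps full float precision so the value round-trips.
            f.write("%s %r\n" % (joint, float(joint_positions[joint])))
    return path


def load_joint_vals(directory, name):
    """Read a pose written by save_joint_vals back into a dict."""
    pose = {}
    with open(os.path.join(directory, name + ".txt")) as f:
        for line in f:
            if line.strip():
                joint, angle = line.split()
                pose[joint] = float(angle)
    return pose


if __name__ == "__main__":
    # Hand-typed stand-in for angles read from the robot (radians).
    right_beat_1 = {"right_s0": 0.25, "right_s1": -0.5, "right_e1": 1.5}
    folder = tempfile.mkdtemp()  # stands in for motion/positions/
    save_joint_vals(folder, "beat_1", right_beat_1)
    print(load_joint_vals(folder, "beat_1"))
```

Storing each arm's pose in its own file is what lets left-arm and right-arm sequences be mixed freely later.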

Each joint was set with care so that the motion connecting consecutive positions would use efficient joint movements, and so that the poses of the two arms would be symmetrical where applicable.

Execution and Timing

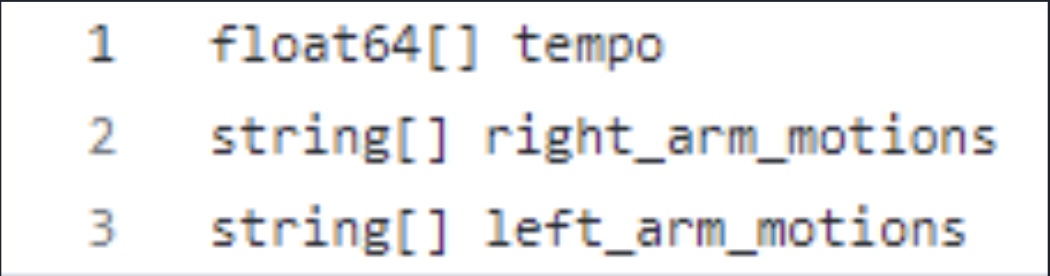

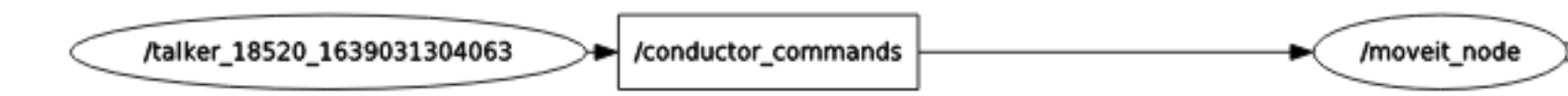

The robot commands sent by the music reader are executed by conductor_motion. This node subscribes to the conductor_commands topic and executes the motions given in music_commands messages, with music_commands.tempo setting the time allocated to each motion.

The planner is initialized and we iterate through the list of motions given for each arm. At each step, the joint position indicated by the motion name is retrieved from its text file in motion/positions/. The joint positions for the two arms are combined into a single goal joint position for both arms, which is passed to the planner to produce a plan.
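Combining the two per-arm poses into one goal can be sketched as a dictionary merge. The function name combine_arm_goals and the example angles are hypothetical. The sketch assumes each pose is a mapping from joint name to angle, which relies on Baxter's joint names carrying a left_ or right_ prefix so the two arms never collide.

```python
def combine_arm_goals(left_pose, right_pose):
    """Merge two per-arm {joint_name: angle} dicts into one goal dict."""
    shared = set(left_pose) & set(right_pose)
    if shared:
        # A joint named in both files would make the goal ambiguous.
        raise ValueError("joints named in both poses: %s" % sorted(shared))
    goal = dict(left_pose)
    goal.update(right_pose)
    return goal


# Hand-typed example poses (radians); real values come from the saved files.
left = {"left_s0": -0.4, "left_e1": 1.2}
right = {"right_s0": 0.4, "right_e1": 1.2}
goal = combine_arm_goals(left, right)
# With MoveIt, a dict like this can be given to
# MoveGroupCommander.set_joint_value_target(goal) before planning.
```

Failing loudly on a shared joint name catches the case where a left-arm file is loaded for the right arm by mistake.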

With this plan, we call the execute function of MoveGroupCommander asynchronously (wait = False) so that we can run a timer and stop the execution once the time allocated by the tempo has passed. The plan for the next step is then calculated from wherever the arm stopped. Because a faster tempo cuts each motion short, the arm covers less distance per beat, which results in a more compact right-arm beat pattern as the tempo increases.
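The timed execution described above might look like the sketch below. The helper name execute_for and its duration argument are assumptions. The group argument is expected to behave like a MoveGroupCommander, of which only execute(plan, wait=False) and stop() are used. The sleep function is injectable so that rospy.sleep can be passed in on the robot.

```python
import time


def execute_for(group, plan, duration, sleep=time.sleep):
    """Start a plan without blocking, then stop it after `duration` seconds.

    `group` is assumed to offer the MoveGroupCommander methods
    execute(plan, wait=False) and stop().
    """
    # wait=False returns immediately, so the timer runs while the arm moves.
    group.execute(plan, wait=False)
    sleep(duration)
    # Cancel whatever is left of the trajectory; the next plan then starts
    # from wherever the arm currently is.
    group.stop()
```

If the plan finishes before the timer expires, the call to stop() has nothing left to cancel, so the same code path covers both slow and fast tempos.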